Introduction

In this article, we will go over the basic concepts of logistic regression and the kinds of problems it can help us solve.

Logistic regression is a classification algorithm used to assign observations to a discrete set of classes. Examples of classification problems include email (spam or not spam), online transactions (fraud or not fraud), and tumors (malignant or benign). Logistic regression transforms its output using the logistic sigmoid function to return a probability value.

What are the types of logistic regression?

Binary (e.g. tumor malignant or benign).

Multiclass (e.g. cats, dogs or sheep).

Logistic Regression

Logistic regression is a machine learning algorithm used for classification problems. It is a predictive analysis algorithm based on the concept of probability.

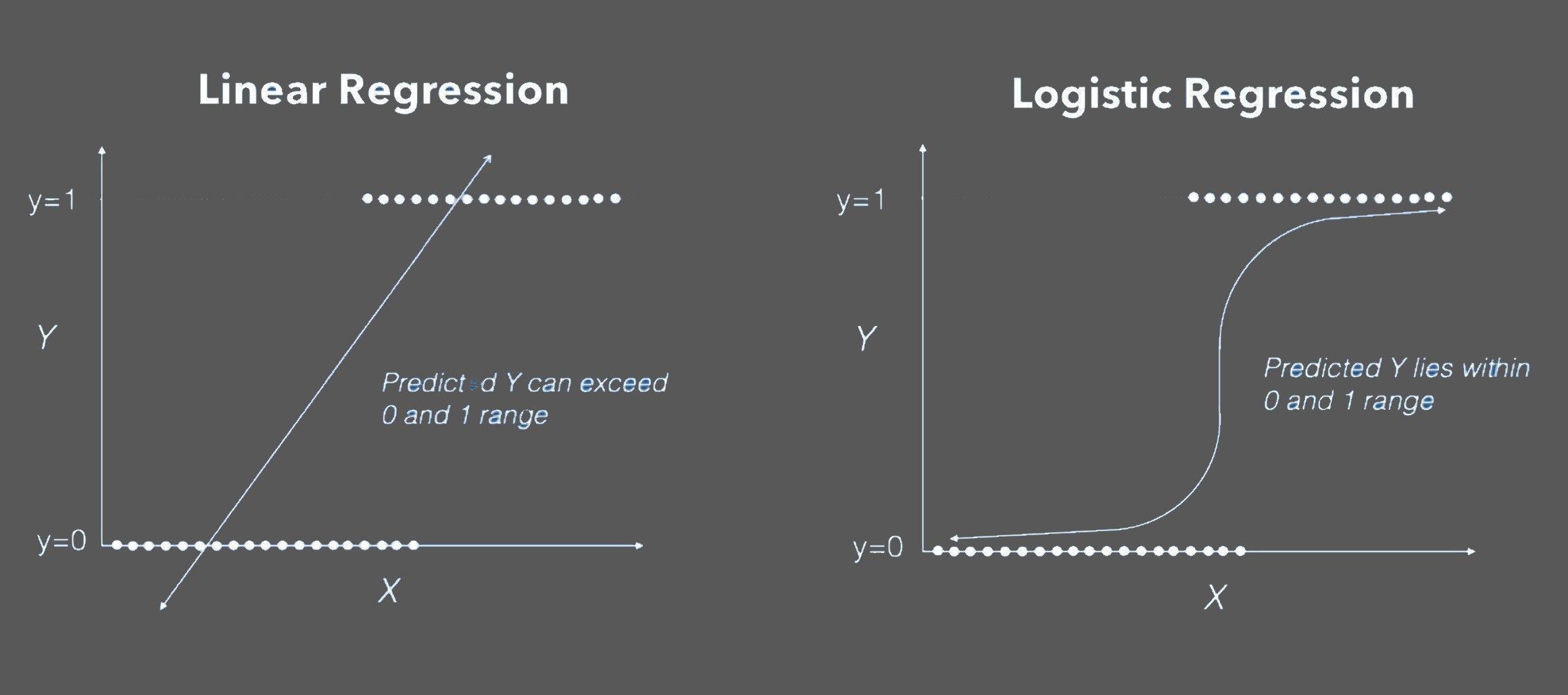

Linear Regression VS Logistic Regression Graph

We can think of logistic regression as a linear regression model whose output is passed through the sigmoid function, also known as the logistic function, instead of being used directly as a linear function. It also uses a more complex cost function than linear regression.

The hypothesis of logistic regression limits its output to values between 0 and 1. A linear function cannot represent it, because a linear function can take a value greater than 1 or less than 0, which is not possible under the hypothesis of logistic regression.

What is the Sigmoid Function?

To map predicted values to probabilities, we use the sigmoid function. The function maps any real value to another value between 0 and 1. In machine learning, we use the sigmoid to map predictions to probabilities.
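As a minimal sketch of that mapping, the sigmoid can be written in a few lines of Python. The function name `sigmoid` and the sample inputs are chosen here for illustration only; the branch on the sign of `z` is just one common way to avoid overflow for large negative inputs.

```python
import math

def sigmoid(z: float) -> float:
    """Map any real number z to a value between 0 and 1."""
    # Branch on the sign so math.exp never receives a large positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)

# Illustrative inputs: large negative values approach 0, large positive values approach 1.
for z in (-5.0, 0.0, 5.0):
    print(z, sigmoid(z))
```

An input of 0 maps to exactly 0.5, which is why 0.5 is a natural default threshold for a binary decision.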

Hypothesis Representation

For linear regression, we used the following formula for the hypothesis:

$$h_\theta(x) = \beta_0 + \beta_1 x$$

For logistic regression, we modify it slightly by passing the linear combination through the sigmoid function:

$$\sigma(Z) = \sigma(\beta_0 + \beta_1 x)$$

We expect our hypothesis to give values between 0 and 1:

$$Z = \beta_0 + \beta_1 x$$

$$h_\theta(x) = \sigma(Z)$$

i.e.

$$h_\theta(x) = \frac{1}{1 + e^{-(\beta_0 + \beta_1 x)}}$$
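The hypothesis for a single input feature can be sketched in Python as follows. The coefficient values `beta0 = -4.0` and `beta1 = 2.0` are made up for illustration and do not come from any fitted model.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def hypothesis(x: float, beta0: float, beta1: float) -> float:
    """Logistic regression hypothesis: the sigmoid of the linear term beta0 + beta1 * x."""
    z = beta0 + beta1 * x
    return sigmoid(z)

# Hypothetical coefficients, chosen only to illustrate the shape of the curve.
beta0, beta1 = -4.0, 2.0
for x in (0.0, 2.0, 4.0):
    print(x, hypothesis(x, beta0, beta1))
```

With these assumed coefficients the linear term is zero at x = 2, so the hypothesis returns 0.5 there, falls toward 0 for smaller x, and rises toward 1 for larger x.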

Decision Boundary

We expect our classifier to give us a set of classes or outputs based on probability: when we pass the inputs through a prediction function, it returns a probability score between 0 and 1.

For example, suppose we have 2 classes, dogs and cats (1 for dog, 0 for cat). We decide on a threshold value; values above the threshold are classified into Class 1, and values below the threshold are classified into Class 2.

As shown in the graph above, we have chosen a threshold of 0.5. If the prediction function returned a value of 0.7, we would classify this observation as Class 1 (DOG). If our prediction returned a value of 0.2, we would classify the observation as Class 2 (CAT).
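The thresholding step can be sketched as a small Python function. The name `classify` is hypothetical, and treating a probability exactly equal to the threshold as Class 1 is an assumption, since the text only covers values above and below it.

```python
def classify(probability: float, threshold: float = 0.5) -> int:
    """Return 1 (dog) if the probability reaches the threshold, otherwise 0 (cat)."""
    # Assumption: a probability exactly equal to the threshold counts as Class 1.
    return 1 if probability >= threshold else 0

print(classify(0.7))  # 1, i.e. DOG
print(classify(0.2))  # 0, i.e. CAT
```

Raising or lowering `threshold` trades one kind of misclassification for the other, so 0.5 is a convenient default rather than a required choice.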

Cost Function

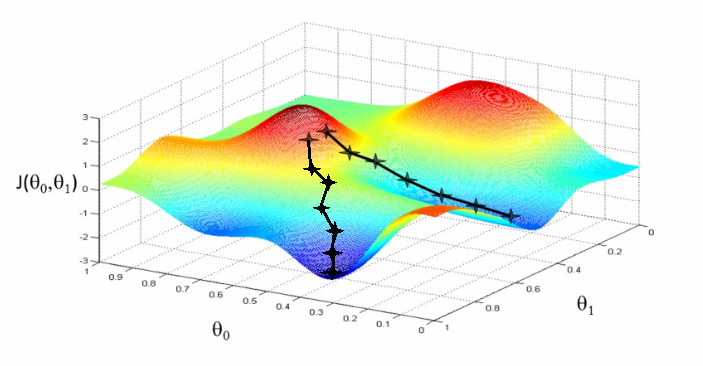

We learned about the cost function J(θ) in linear regression. The cost function represents the optimization objective: we create a cost function and minimize it so that we can develop an accurate model with minimum error.

If we try to use the cost function of linear regression in logistic regression, it is of little use, because it ends up being a non-convex function with many local minima, which makes it very difficult to minimize the cost and find the global minimum.

For logistic regression, the cost function is defined as:

If $y = 1$: $-\log(h_\theta(x))$

If $y = 0$: $-\log(1 - h_\theta(x))$

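The two cases above can be combined into a single cost function, averaged over the $m$ training observations:

$$J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log\left(h_\theta(x^{(i)})\right) + \left(1 - y^{(i)}\right) \log\left(1 - h_\theta(x^{(i)})\right) \right]$$

When $y^{(i)} = 1$ only the first term remains, and when $y^{(i)} = 0$ only the second term remains.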
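A rough Python sketch of this cost is shown below. The helper names `cost` and `average_cost` are illustrative, and the sketch assumes every predicted probability lies strictly between 0 and 1, because the logarithm is undefined at 0.

```python
import math

def cost(h: float, y: int) -> float:
    """Cost for one observation: -log(h) when y == 1, -log(1 - h) when y == 0."""
    # Assumes 0 < h < 1; the logarithm is undefined at exactly 0.
    return -(y * math.log(h) + (1 - y) * math.log(1 - h))

def average_cost(predictions, labels) -> float:
    """Mean cost over all observations."""
    total = sum(cost(h, y) for h, y in zip(predictions, labels))
    return total / len(labels)

# A confident correct prediction costs little; a confident wrong one costs a lot.
print(cost(0.9, 1))
print(cost(0.1, 1))
print(average_cost([0.9, 0.2, 0.7], [1, 0, 1]))
```

The cost grows without bound as a prediction approaches the wrong extreme, which is what penalizes confident mistakes so heavily.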

Now the question arises: how do we minimize the cost? Minimizing the cost is the main goal of gradient descent.

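To minimize our cost function, we need to run gradient descent on each parameter, i.e. repeatedly apply the update $\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta)$, where $\alpha$ is the learning rate.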

Gradient Descent Simplified Picture

Gradient descent has an analogy: imagine yourself at the top of a mountain valley, left stranded and blindfolded, with the objective of reaching the bottom of the hill. Feeling the slope of the terrain around you is what anyone would do. This action is analogous to calculating the gradient, and taking a step is analogous to one iteration of the update to the parameters.
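The step-by-step descent can be sketched in Python for a model with one input feature. This is an illustrative sketch, not a production implementation: the toy data, the learning rate of 0.1, and the iteration count are all made up, and the gradient expressions are the standard ones for the logistic cost.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def gradient_descent(xs, ys, learning_rate=0.1, iterations=5000):
    """Fit beta0 and beta1 by repeatedly stepping against the gradient of the cost."""
    beta0, beta1 = 0.0, 0.0
    m = len(xs)
    for _ in range(iterations):
        # Prediction error for every observation under the current parameters.
        errors = [sigmoid(beta0 + beta1 * x) - y for x, y in zip(xs, ys)]
        # Partial derivatives of the average cost with respect to each parameter.
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # One "step downhill": move each parameter against its gradient.
        beta0 -= learning_rate * grad0
        beta1 -= learning_rate * grad1
    return beta0, beta1

# Made-up toy data: larger x values tend to belong to class 1.
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys = [0, 0, 0, 1, 0, 1, 1, 1]

beta0, beta1 = gradient_descent(xs, ys)
print(beta0, beta1)
print(sigmoid(beta0 + beta1 * 0.5), sigmoid(beta0 + beta1 * 4.0))
```

Each pass through the loop corresponds to one step in the analogy: the gradients say which way is uphill, and the learning rate controls how large a step is taken in the opposite direction.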

Conclusion

I have given you a basic idea of logistic regression. I hope this article was helpful and has motivated you enough to get interested in the topic.
